The user can specify how many executors should fall on each node through configurations.

#SPARK DRIVER APP SOFTWARE#


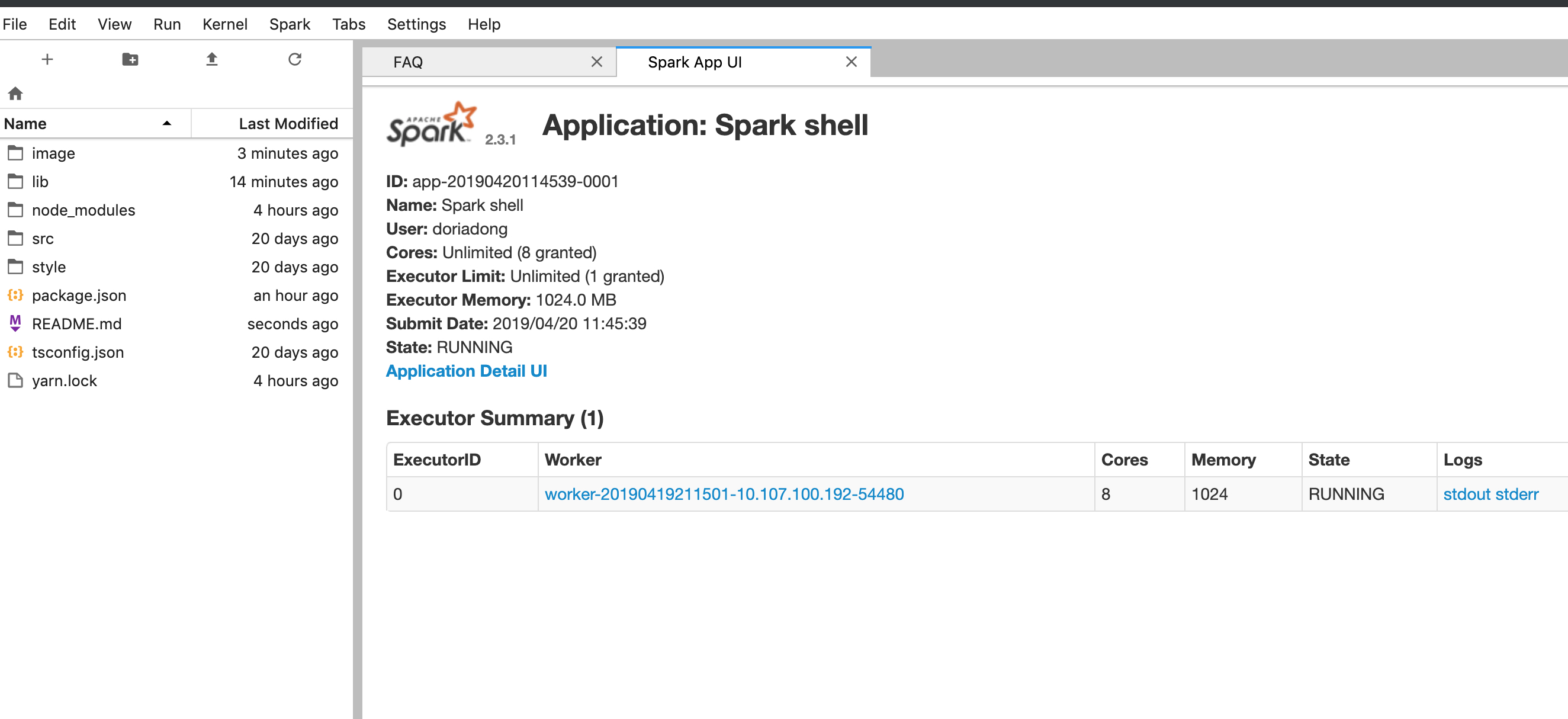

In the previous illustration, we see our driver on the left and the four executors on the right. In this diagram, we removed the concept of cluster nodes. Multiple Spark Applications can run on a cluster at the same time. We will talk more in depth about cluster managers in Part IV: Production Applications of this book.

#SPARK DRIVER APP DOWNLOAD#

The cluster manager controls physical machines and allocates resources to Spark Applications. This can be one of several core cluster managers: Spark's standalone cluster manager, YARN, or Mesos.

#SPARK DRIVER APP HOW TO#

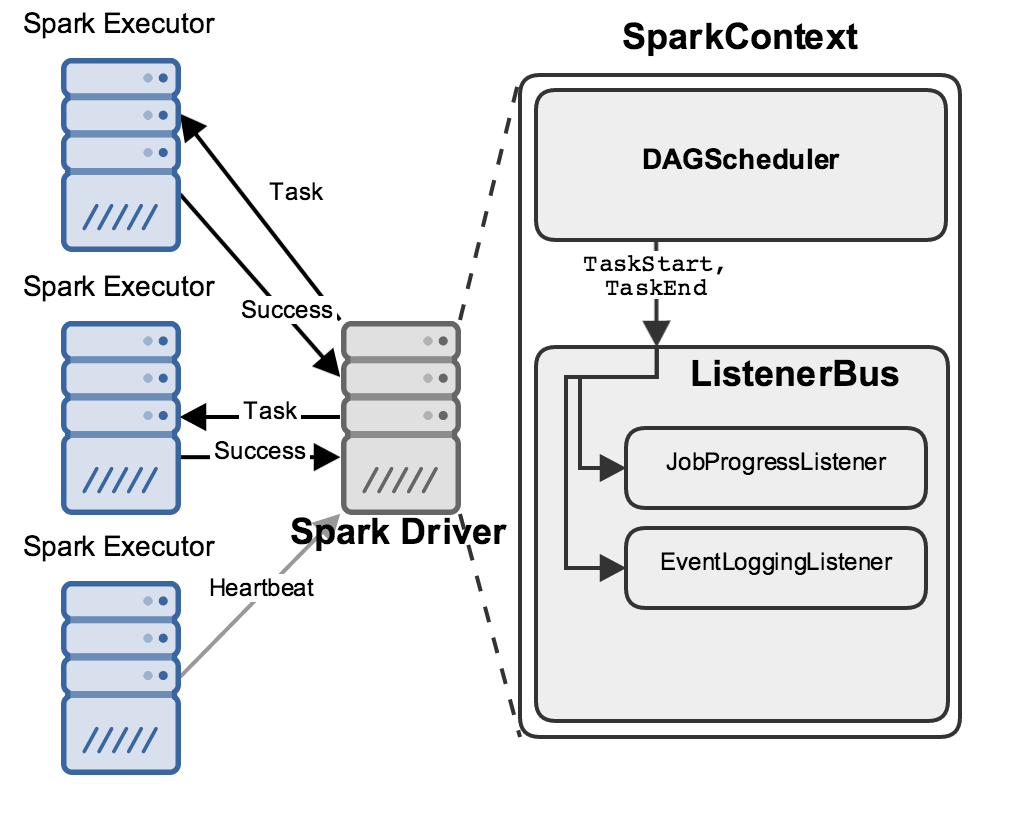

Spark Applications consist of a driver process and a set of executor processes. The driver process runs your main() function, sits on a node in the cluster, and is responsible for three things: maintaining information about the Spark Application; responding to a user's program or input; and analyzing, distributing, and scheduling work across the executors (defined momentarily). The driver process is absolutely essential: it is the heart of a Spark Application and maintains all relevant information during the lifetime of the application.

The executors are responsible for actually executing the work that the driver assigns them. This means each executor is responsible for only two things: executing code assigned to it by the driver, and reporting the state of the computation on that executor back to the driver node.

The remainder of this Apache Spark tutorial covers some of the configuration properties of a Spark project.

Driver's Memory Usage: This is the upper limit on the memory used by the Spark driver. If the driver needs more memory than this limit allows, out-of-memory errors may occur in the driver. Exception: if the Spark application is submitted in client mode, the property has to be set via the command-line option --driver-memory.
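As a rough illustration of the driver and executor roles described above, the following sketch uses plain Java threads as stand-ins for executors. It is a toy analogy, not Spark's API: the class `DriverExecutorSketch` and the method `sumOnExecutors` are hypothetical names introduced here. The "driver" code splits the data into partitions and schedules one task per partition, and each "executor" thread runs its task and reports a partial result back.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.Callable;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

// Toy analogy only: a thread pool stands in for Spark executors.
final class DriverExecutorSketch {

    // "Driver" side: split the input into partitions, hand each partition
    // to an executor thread, then combine the partial results reported back.
    static long sumOnExecutors(List<Integer> data, int numExecutors) {
        ExecutorService executors = Executors.newFixedThreadPool(numExecutors);
        try {
            // Partition size, rounded up so every element is covered.
            int chunk = (data.size() + numExecutors - 1) / numExecutors;
            List<Future<Long>> reports = new ArrayList<>();
            for (int start = 0; start < data.size(); start += chunk) {
                List<Integer> partition =
                        data.subList(start, Math.min(start + chunk, data.size()));
                // "Executor" side: run the assigned code and report the result.
                Callable<Long> task = () -> {
                    long partial = 0;
                    for (int value : partition) {
                        partial += value;
                    }
                    return partial;
                };
                reports.add(executors.submit(task));
            }
            long total = 0;
            for (Future<Long> report : reports) {
                total += report.get(); // wait for each executor to report back
            }
            return total;
        } catch (InterruptedException | ExecutionException e) {
            throw new IllegalStateException("an executor failed to report back", e);
        } finally {
            executors.shutdown();
        }
    }

    public static void main(String[] unused) {
        List<Integer> data = new ArrayList<>();
        for (int i = 1; i <= 100; i++) {
            data.add(i);
        }
        System.out.println(sumOnExecutors(data, 4)); // prints 5050
    }
}
```

In a real Spark Application the executors are separate processes, usually on other machines, and the cluster manager decides where they run. The thread pool here only mirrors the division of labour.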
Driver's Maximum Result Size: This is the upper limit on the total size of the serialized results of all partitions for each Spark action. Submitted jobs abort if the limit is exceeded. Setting it to '0' means there is no upper limit, but a high limit may cause out-of-memory errors in the driver. Following is an example that sets the maximum result size to 200m: conf.set("", "200m")

Number of Spark Driver Cores: This represents the maximum number of cores a driver process may use. Exception: this property is considered only in cluster mode.

Application Name: This is the name that you can give to your Spark application. The application name appears in the Web UI and logs, which makes debugging and visualizing easier when multiple Spark applications are running on the machine or cluster. Following is an example to set the Spark application name:

AppConfigureExample.java

    import org.apache.spark.SparkConf;
    import org.apache.spark.SparkContext;

    /* Configure Apache Spark Application Name */
    SparkConf conf = new SparkConf().setMaster("local");
    conf.set("", "SparkApplicationName");
    SparkContext sc = new SparkContext(conf);
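The driver settings discussed above can also be passed on the command line, which is the required route for driver memory in client mode. The sketch below only assembles a `spark-submit` argument list; it does not launch Spark. The property names `spark.app.name` and `spark.driver.maxResultSize` are the standard Spark configuration keys, and using them here is an assumption about which properties the examples above refer to. The class `SubmitArgsSketch`, the `1g` memory value, and the `app.jar` file name are hypothetical.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Sketch: assemble a spark-submit command line from driver settings.
final class SubmitArgsSketch {

    static List<String> buildSubmitArgs(String driverMemory,
                                        Map<String, String> settings,
                                        String mainClass,
                                        String appJar) {
        List<String> submitArgs = new ArrayList<>();
        submitArgs.add("spark-submit");
        submitArgs.add("--master");
        submitArgs.add("local");
        submitArgs.add("--deploy-mode");
        submitArgs.add("client");
        // In client mode the driver JVM is already running by the time the
        // application's SparkConf is read, so driver memory is given here.
        submitArgs.add("--driver-memory");
        submitArgs.add(driverMemory);
        // Every other property is passed as --conf key=value.
        for (Map.Entry<String, String> entry : settings.entrySet()) {
            submitArgs.add("--conf");
            submitArgs.add(entry.getKey() + "=" + entry.getValue());
        }
        submitArgs.add("--class");
        submitArgs.add(mainClass);
        submitArgs.add(appJar); // hypothetical application jar
        return submitArgs;
    }

    public static void main(String[] unused) {
        Map<String, String> settings = new LinkedHashMap<>();
        settings.put("spark.app.name", "SparkApplicationName");
        settings.put("spark.driver.maxResultSize", "200m");
        System.out.println(String.join(" ",
                buildSubmitArgs("1g", settings, "AppConfigureExample", "app.jar")));
    }
}
```

The driver cores setting is left out on purpose, since it is considered only in cluster mode and this sketch builds a client-mode command.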
